Implementing Laziness in C

Siddharth Bhat

15 Apr 2021

•

9 min read

The aim of this blog post is to explain haskell's (specifically, GHC) evaluation model without having to jump through too many hoops. I'll explain how pervasive non-strictness is when it comes to Haskell, and why compiling non-strictness is an interesting problem.

Showing off non-strictness:

We first need a toy example to work with to explain the fundamentals of

non-strict evaluation, so let's consider the example below.

I'll explain the case construct which is explained below.

Don't worry if ato lazy evaluation "looks weird",

it feels that way to everyone till one plays around with it for a while!

We will interpret this example as both a strict program and a non-strict program. This will show us that we obtain different outputs on applying different interpretations.

We distinguish between primitive values (integers such as 1, 2, 3) and boxed values

(functions, data structures). Boxed values can be evaluated non-stricly. Primitive values

do not need evaluation: they are primitive.

Code

-- Lines starting with a `--` are comments.

-- K is a function that takes two arguments, `x` and `y`, that are both boxed values.

-- K returns the first argument, `x`, ignoring the second argument, `y`.

-- Fun fact: K comes for the K combinator in lambda calculus.

kCombinator :: Boxed -> Boxed -> Boxed

kCombinator x y = x

-- one is a function that returns the value 1# (primitive 1)

one :: PrimInt

one = 1

-- Loopy is a function that takes zero arguments, and tries to return a boxed value.

-- Loopy invokes itself, resulting in an infinite loop, so it does not actually return.

loopy :: Boxed

loopy = loopy

-- main is our program entry point.

-- main takes no arguments, and returns nothing

main :: Void

main = case kCombinator one loopy of -- force K to be evaluated with a `case`

kret -> case kret of -- Force evaluation

i -> printPrimInt i -- Call the forced value of `kret` as `i` and then print it.

A quick explanation about case:

case is a language construct that forces evaluation. In general, no value

is evaluated unless it is forced by a case.

Analysing the example:

The strict interpretation:

If we were coming from a strict world, we would have assumed that the expression

K one loopy would first try to evaluate the arguments, one and loopy.

Evaluating one would return the primitive value 1, so this has no problem.

On trying to evaluate loopy, we would need to re-evaluate loopy, and so on

ad infitum, which would cause this program to never halt.

This is because, as programmers coming from a strict world, we assume that values are evaluated as soon as possible.

So, the output of this program is to have the program infinite-loop for ever, under the strict interpretation.

The non-strict interpretation:

In the non-strict world, we try to evaluate K(1, loopy) since we are asked the result

of it by the case expression. However, we do not try to evaluate loopy, since

no one has asked what it's value is!

Now, we know that

kCombinator x y = x

Therefore,

kCombinator one loopy = one

regardless of what value loopy held.

So, at the case expression:

main = case K(one, loopy) of -- force K to be evaluated with a `case`

>>> kret -> ...

kret = one, we can continue with the computation.

main :: () -> Void

main = case kCombinator one loopy of -- force K to be evaluated with a `case`

kret -> case kret of -- Call the return value of K as `x`, and force evaluation.

>>> i -> printPrimInt i -- Call the vreturn value of `x` as `primx` and then print it.

Here, we force kret (which has value one) to be evaluated with case kret of....

since one = 1, i is bound to the value 1.

Once i is returned, we print it out with printPrimInt(primx).

The output of the program under non-strict interpretation is for it to print out 1.

Where does the difference come from?

Clearly, there is a divide: strict evaluation tells us that this program should never halt. Non-strict evaluation tells us that this program will print an output!

To formalize a notion of strictness, we need a notion of bottom (_|_).

A value is said to be bottom if in trying to evaluate it, we reach an

undefined state. (TODO: refine this, ask ben).

Now, if a function is strict, it would first evaluate its arguments and then

compute the result. So, if a strict function is given a value that is bottom,

the function will try to evaluate the argument, resulting in the computation

screwing up, causing the output of the whole function to be bottom.

Formally, a function f is strict iff (if and only if) f(bottom) = bottom.

Conversely, a non-strict function does not need to evaluate its arguments if it

does not use them, as in the case of K 1 loopy. In this case, f(bottom) need

not be equal to bottom.

Formally, a function f is non-strict iff (if and only if) f(bottom) /= bottom.

As Paul Halmos says, " A good stack of examples, as large as possible, is indispensable for a thorough understanding of any concept, and when I want to learn something new, I make it my first job to build one.". Let us consider some examples.

id

id x = x

id (3) = 1

id (bottom) = bottom

id is strict, since id(bottom) = bottom.

const

const_one x = 1

const_one(bottom) = 1

const_one(3) = 1

const_one is not strict, as const_one(bottom) /= bottom.

K

K x y = x

K 1 2 = 1

K 1 bottom = 1

K bottom 2 = bottom

Note that K(bottom, y) = bottom, so K is strict in its first argument, and

K(x, bottom) /= bottom, so K is non-strict in its second argument.

This is a neat example showing how a function can be strict and lazy in different arguments of the function.

Compiling non-strictness, v1:

How does GHC compile non-strictness?

GHC (the Glasgow haskell compiler) internally uses multiple intermediate

representations, in order of original, to what is finally produced:

- Haskell (the source language)

- Core (a minimal set of constructs to represent the source language)

- STG (Spineless tagless G-machine, a low-level intermediate representation that accurately captures non-strict evaluation)

- C-- (A C-like language with GHC-specific customization to support platform independent code generation).

- Assembly

Here, I will show how to lower simple non-strict programs from a fictitous Core like language down to C , while skipping STG, since it doesn't

really add anything to the high-level discussion at this point.

Our example of compiling non-strictness

Now, we need a strategy to compile the non-strict version of our program.

Clearly, C cannot express laziness directly, so we need some other

mechanism to implement this. I will first code-dump, and then explain as we go along.

Executable repl.it:

Source code

/* a boxed value is a function that can be executed to compute something.

* We make the return value `void` on purpose. This needs to be typecast to a concrete

* Boxed type to get a value out of it: eg, typecast to BoxedInt.

*/

typedef void (*Boxed)();

/* A boxed int, that on evaluation yields an int*/

typedef int (*BoxedInt)();

/* one = 1# */

int one() {

return 1;

}

/* bottom = bottom */

void bottom() {

printf("in function: %s\n", __FUNCTION__);

bottom();

}

/* K x y = x */

Boxed K(Boxed x, Boxed y) {

return x;

}

/*

main :: () -> Void

main = case K(one, loopy) of -- force K to be evaluated with a `case`

kret -> case kret of -- Call the return value of K as `x`, and force evaluation.

i -> printPrimInt(i) -- Call the vreturn value of `x` as `primx` and then print it.

*/

int main() {

Boxed kret = K((Boxed)one, (Boxed)bottom);

int i = (*(BoxedInt)kret)();

printf("%d", i);

return 1;

}

We convert every possibly lazy value into a Boxed value, which is a function pointer that knows how to compute the

underlying value. When the lazy value is forced by a case, we call the Boxed function to compute the output.

This is a straightforward way to encode non-strictness in C. However, do note that this is not lazy, because a value could get recomputed many times. Laziness guarantees that a value is only computed once and is later memoized.

Compiling with a custom call stack / continuations

As one may notice, we currenly use the native call stack every time we force a lazy value. However, in doing so, we might actually run out of stack space, which is undesirable. Haskell programs like to have "deep" chains of values being forced, so we would quite likely run out of stack space.

Therefore, GHC opts to manage its own call stack on the heap. The generated code looks as you would imagine: we maintain a stack of function pointers + auxiliary data ( stack saved values), and we push and pop over this "stack". When we run out of space, we <find correct way to use mmap> to increase our "stack" size.

I've played around with this value a little bit, and have found that the modern stack size is quite large: IIRC, It allowed me to allocate ~26 GB. I believe that the amount it lets you allocate is tied directly to the amount of physical memory + swap you have. I'm not too sure, however. So, for my haskell compiler, sxhc, I am considering cheating and just using the stack directly.

Code for the same example (with the K combinator) is provided here.

Executable repl.it:

Source code

/* a boxed value is a function that can be executed to compute something. */

typedef void (*Boxed)();

/* a return continuation that receives a boxed value. */

typedef void (*BoxedContinuation)(Boxed);

/* A return continuation that receives an int value. */

typedef void (*IntContinuation)(int);

/* Custom stack allocated on the .data section*/

void *stack[STACK_SIZE];

/* Stack pointer */

int sp = 0;

/* Push a continuation `cont` */

void pushContinuation(void *cont) {

assert(sp >= 0);

assert(sp < STACK_SIZE);

stack[sp] = cont;

sp++;

}

/* Pop a continuation frame. */

void *popContinuation() {

assert(sp < STACK_SIZE);

assert(sp >= 0);

sp--;

void *cont = stack[sp];

return cont;

}

/* one = 1# */

void one() {

printf("in function: %s\n", __FUNCTION__);

void *f = popContinuation();

(*(IntContinuation)(f))(1);

}

/* bottom = bottom */

void bottom() {

printf("in function: %s\n", __FUNCTION__);

bottom();

}

/* K x y = x */

void K(Boxed x, Boxed y) {

printf("in function: %s\n", __FUNCTION__);

void *f = popContinuation();

(*(BoxedContinuation)(f))(x);

}

void XForceContinuation(int i) {

printf("in function: %s\n", __FUNCTION__);

printf("%d", i);

}

void KContinuation(Boxed x) {

printf("in function: %s\n", __FUNCTION__);

pushContinuation((void *)XForceContinuation);

x();

}

int main() {

printf("in function: %s\n", __FUNCTION__);

pushContinuation((void *)KContinuation);

K(one, bottom);

return 0;

}

we maintain our own "call stack" of continuations. These continuations are precisely the parts of the code that deal with the return value of a case. ever

case x of

xeval -> expr

compiles to:

pushContinuation(XEvalContinuation);

x()

That is, push a continuation, and then "enter" into x.

One might have a question: does this still not use the call stack? There is a function call at the end of most functions in the source code, so in theory, we are using the call stack, right? The answer is no. It's thanks to a neat optimisation technique called tail call elimination. The observation is that after the call, there is no more code to execute in the caller. So, by playing some stack tricks, one can convert a call to a jump.

Remember, a call instruction uses the stack to setup a stack frame, under the assumption that we will ret at some point.

But, clearly, under our compilation model, we will never ret, simply call more functions. So, we don't need the state maintained by a call. We can simply jmp.

Wrapping up

I hope I've managed to convey the essence of how to compile Haskell. I skipped a couple of things:

- haskell data types: sum and product types. These are straightforward, they just compiled to tagged structs.

letbindings: These too are straightforward, but come with certain retrictions in STG. It's nothing groundbreaking,and is well written in the paper.- Black holes: Currently, we are not truly lazy, in that we do not update values once they are computed.

- GC: how to weave the GC through the computation is somewhat non-trivial.

All of this is documented in the excellent paper: Implementing lazy languages on stock hardware.

Appendix: Non-strict versus Lazy

I have use the word non-strict throughout, and not lazy.

There is a technical difference between the two, which is that:

non-strictis a evaluation order detail that guarantees that values arelazyis one way to implementnon-strict, that provides guarantees that a value will not be evaluated twice.

For example, consider the small haskell snippet:

f :: Int -> Int -> Int

f x y = x * 2

loop :: a

loop = loop

main :: IO ()

main = let a = 10 + 10 print (f a loop)

We expect that the program will never evaluate y=loop because f

does not use y anywhere. We want the program to return the answer:

f 10 loop

= (10 + 10) * (10 + 10)

= 20 * 20

= 400

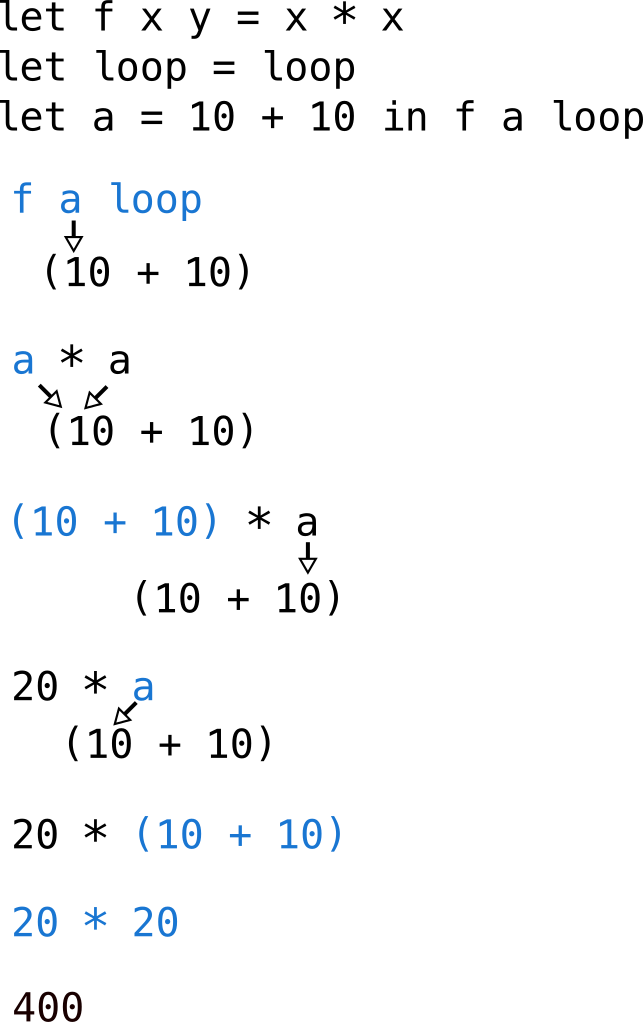

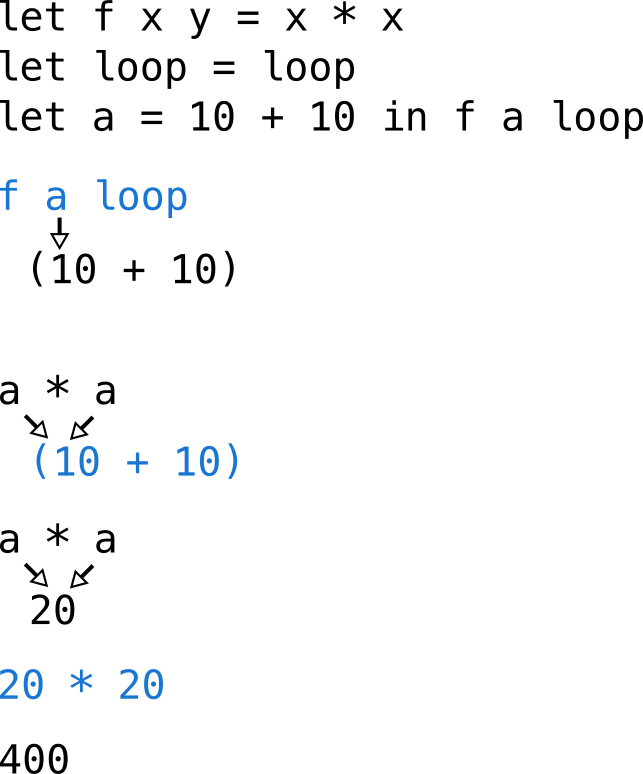

However, we don't really care if the program is evaluated as shown here, using lazy evaluation, (where the blue color indicates what is being evaluated):

a is evaluated twice:

See that both these programs compute the same answer, but do so differently. In the

former case of lazy evaluation the variable a is shared across both occurences: it is computed

once, and the result is reused. In the latter case of non-strict evaluation, we see that

the variable a is evaluated twice independently, once for each occurrence of a.

There are trade-offs with both approaches. In the lazy evaluation case, we are guaranteed

that a large expression will not be evaluated twice. On the flip side, in the case of

non-strict evaluation, since we can evaluate the same expression multiple times, we can

exploit parallelism: Two cores can conceivably compute 10 + 10 in parallel to arrive at 20.

This is in contrast to the lazy evaluation case where this is not allowed, since the compiler

must guarantee that an expression is evaluated at most once. If the same expression 10 + 10

is evaluated on two cores (in parallel), it is still evaluated twice! So there is a trade-off

between guarantees to the end user (that an expression will not be evaluated twice), and

freedom for the compiler (to evaluate in parallel). This is a common theme in language

and compiler design, and one I find very interesting.

WorksHub

Jobs

Locations

Articles

Ground Floor, Verse Building, 18 Brunswick Place, London, N1 6DZ

108 E 16th Street, New York, NY 10003

Subscribe to our newsletter

Join over 111,000 others and get access to exclusive content, job opportunities and more!